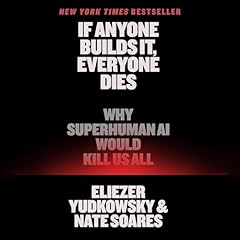

If Anyone Builds It, Everyone Dies

The Case Against Superintelligent AI

Narrated by: Rafe Beckley

Brought to you by Penguin.

An instant NEW YORK TIMES bestseller

**A Guardian biggest book of the autumn**

AI is the greatest threat to our existence that we have ever faced.

The scramble to create superhuman AI has put us on the path to extinction – but it’s not too late to change course. Two pioneering researchers in the field, Eliezer Yudkowsky and Nate Soares, explain why artificial superintelligence would be a global suicide bomb and call for an immediate halt to its development.

The technology may be complex but the facts are simple: companies and countries are in a race to build machines that will be smarter than any person, and the world is devastatingly unprepared for what will come next.

Could a machine superintelligence wipe out our entire species? Would it want to? Would it want anything at all? In this urgent book, Yudkowsky and Soares explore the theory and the evidence, present one possible extinction scenario and explain what it would take for humanity to survive.

The world is racing to build something truly new – and if anyone builds it, everyone dies.

'The most important book of the decade' MAX TEGMARK, author of Life 3.0

'A loud trumpet call to humanity to awaken us as we sleepwalk into disaster - we must wake up' STEPHEN FRY

© Eliezer Yudkowsky and Nate Soares 2025 (P) Penguin Audio 2025

As for the overall line of argument in the book, at no point did I feel it was mistaken or forced. At a few points, I anticipated more details than were actually provided, but I see that there are extensive additional background materials available online: https://ifanyonebuildsit.com/resources

The writing is generally clear and hard-hitting. I wonder if some of the strength of various parables will fly over the heads of some readers. But I hope to be pleasantly surprised by what politicians (and their advisors) actually take away from reading the book.

On checking online reviews, it's evident that not every reader is persuaded. Looking more closely at these negative reviews, I suspect these critics have read IABIED in only a cursory manner, searching for points they can cherry-pick to bolster their pre-existing prejudices.

In conclusion, I encourage *everyone* to take the time to read the book in its entirety, and to savour its arguments. No topic is more urgent than figuring out how to avoid ASI being built using current methods and processes. That's the case IABIED makes, and it makes it well.

(By the way, I found the narrator to be excellent.)

Full of important arguments, examples, and parables
